We perform teaching and research in machine learning strategies for the pattern analysis of various kinds of data. This comprises statistical models for clustering, graphical models for network inference and algorithmic methods to efficiently find these structures in the data.

Research

We work on some of the most complex and interesting challenges in AI.

Mission Statement

The analysis of patterns in data is one of the central problems in natural science and engineering today. Large-scale experiments and computer simulations produce huge amounts of highly complex data, e.g., in cell proteomics, remote sensing, or acoustic and visual scene analysis.

Machine learning extracts hidden structures from these data sources, either with the help of a teacher signal or, often, without any supervision. For example, the mass spectra from thousands of molecular biology experiments are exploited to train complex probabilistic hidden Markov models for peptide sequencing, a data analysis approach pioneered in our group. As another example, combinations of image patches, edges and texture features are grouped by graphical models to train vision systems for image categorization and scene understanding. All these statistical models are determined by optimization algorithms that should reliably predict the hidden patterns despite experimental noise. The challenge is to develop algorithms with good generalization performance rather than greedy minimizers of empirical costs.

We currently study statistical models for data clustering, graphical models for network inference, and algorithmic methods to efficiently find these structures in the data. A major part of our algorithmic and modeling work is devoted to quantifying the robustness of the learned structures, i.e., to providing the data analyst with uncertainty estimates for models and methods.
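
One generic way to attach a robustness estimate to a clustering is resampling-based stability: fit the model on independent random subsamples and measure how consistently the resulting partitions agree. The sketch below assumes k-means (with k-means++ seeding) as the clustering model and the Rand index as the agreement score; both choices, and all function names, are illustrative assumptions rather than the group's specific method.

```python
import numpy as np


def assign(x, centers):
    """Index of the nearest center for every row of x."""
    return ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1).argmin(axis=1)


def kmeans_centers(x, k, rng, n_restarts=5, n_iter=50):
    """Lloyd's k-means with k-means++ seeding; keeps the restart with the lowest cost."""
    best_centers, best_cost = None, np.inf
    for _ in range(n_restarts):
        # k-means++ seeding: pick each new center with probability proportional to D^2.
        centers = [x[rng.integers(len(x))]]
        for _ in range(k - 1):
            d2 = ((x[:, None, :] - np.array(centers)[None, :, :]) ** 2).sum(axis=-1).min(axis=1)
            centers.append(x[rng.choice(len(x), p=d2 / d2.sum())])
        centers = np.array(centers)
        for _ in range(n_iter):
            labels = assign(x, centers)
            # Keep the old center if a cluster ends up empty.
            centers = np.array([x[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                                for j in range(k)])
        cost = ((x - centers[assign(x, centers)]) ** 2).sum()
        if cost < best_cost:
            best_centers, best_cost = centers, cost
    return best_centers


def rand_index(a, b):
    """Fraction of point pairs on which two partitions agree (same vs. different cluster)."""
    same_a = a[:, None] == a[None, :]
    same_b = b[:, None] == b[None, :]
    upper = np.triu_indices(len(a), k=1)
    return float(np.mean(same_a[upper] == same_b[upper]))


def clustering_stability(x, k, n_pairs=10, seed=0):
    """Mean agreement between clusterings fitted on independent random half-samples."""
    rng = np.random.default_rng(seed)
    half = len(x) // 2
    scores = []
    for _ in range(n_pairs):
        centers_1 = kmeans_centers(x[rng.choice(len(x), size=half, replace=False)], k, rng)
        centers_2 = kmeans_centers(x[rng.choice(len(x), size=half, replace=False)], k, rng)
        # Both fitted models label the full data set so that their partitions are comparable.
        scores.append(rand_index(assign(x, centers_1), assign(x, centers_2)))
    return float(np.mean(scores))
```

A stability close to 1 indicates that the partition is reproducible under resampling; comparing the score across different numbers of clusters k is one simple way to turn it into an uncertainty statement about the model itself.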

Our Focus Areas

Statistical Learning Theory

We study the statistical and algorithmic principles behind learning from various kinds of data. Model selection and the notion of model complexity are key issues in our research.

Inverse Reinforcement Learning

A principled approach to designing an appropriate reward function in reinforcement learning is to infer it from recorded demonstrations by experts who are assumed to act optimally in a particular environment, i.e., to perform the desired behaviour.

Domain Generalisation

Identifying the right invariances that allow generalization to completely unseen domains is crucial for deploying models robustly in practice and for combating distributional shift. We study invariant structures in the solution space that enable knowledge transfer.

Interpretable Machine Learning

Interpretable ML aims to make model behaviour understandable to humans, a goal that lies at the heart of human-machine interaction. We use these explanations for model debugging, fairness, robustness, and knowledge discovery.

Current Projects

Personalised 3D Heart

Echocardiography monitors heart movements for the noninvasive diagnosis of heart diseases. It produces high-dimensional video data with substantial stochasticity in the measurements, which are frequently difficult to interpret. To address this challenge, we propose an automated framework that infers a high-resolution, personalized 4D (3D plus time) surface mesh of cardiac structures from 2D echocardiography video data. The visualization of patient-specific 3D models of the beating heart is of fundamental importance in precision medicine. It enables automated assessment of cardiac morphology and, consequently, permits early treatment of cardiovascular (CV) diseases, which are the most common cause of death worldwide. Currently, most machine learning algorithms developed for medical applications are black-box models that provide no useful insight into their prediction mechanism. In contrast, such heart models enable automated and robust measurement of various clinical variables while remaining interpretable, which is essential for building trust in machine-learning-based algorithms. They can not only be employed to automate echocardiography measurements but are also expected to emerge as a valuable tool for discovering novel, reliable features for the diagnosis of pathologies. To tackle this challenging problem, we advance the state of the art in 3D shape reconstruction from 2D images, in our case echocardiography videos, by combining ideas from generative modeling with differentiable rendering approaches.

ML in Dental Imaging

The dental panoramic X-ray, more commonly known as an orthopantomogram (OPG), is a panoramic scan of the upper and lower jaw. It is a frequent routine exam in dental care, with indications ranging from third molar evaluation to routine orthodontic assessment.

Root development and interaction with alveolar nerve

One of the primary uses of OPG images is to evaluate the root development of the third molar (also known as the wisdom tooth) and its interaction with the alveolar nerve. This assessment is both time-consuming and error-prone for clinicians when it is not complemented by more informative imaging methods.

Part of this project is to develop semi-supervised machine learning algorithms that rely on the large amount of unannotated images to automate this process. To this end, we use object detection algorithms to automatically identify the region surrounding the third molar and the alveolar nerve, and contrastive-learning-based approaches to perform the classification task with limited labelled data.
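
Contrastive pre-training on unlabelled images typically pulls together the embeddings of two augmented views of the same image and pushes apart the embeddings of different images. As an illustration only, the sketch below implements a simplified, one-directional InfoNCE-style loss over a batch of paired embeddings; the encoder, augmentations, temperature and the function name are assumptions, since the project's actual objective is not specified here.

```python
import numpy as np


def info_nce_loss(z1, z2, temperature=0.1):
    """Simplified InfoNCE loss for a batch of paired embeddings.

    z1[i] and z2[i] embed two augmented views of the same image (a positive
    pair); every other row of z2 acts as a negative for z1[i].
    """
    # L2-normalise so that dot products are cosine similarities.
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature               # (batch, batch) similarity matrix
    logits -= logits.max(axis=1, keepdims=True)    # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # The positive for row i sits on the diagonal; average its negative log-probability.
    return float(-np.mean(np.diag(log_probs)))
```

After such pre-training, a small classifier on top of the learned embeddings can be trained with the few labelled images available.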

Detection of carotid artery calcification

Outside dental care, another use of OPGs is the detection of arterial carotid plaque (ACP). ACP is one of the major etiological factors of stroke, and the risk of stroke is directly related to the amount of ACP. Because of the severe risk of permanent disability in stroke patients, whenever ACP is detected in an OPG the patient needs to be referred immediately for additional non-invasive screening and, depending on the degree of stenosis caused by the ACP, for conservative or surgical treatment.

Unfortunately, in daily clinical practice the carotid artery region is under-examined by dental practitioners, so ACP largely goes undetected. In this sub-project we are automating the screening for ACP in OPGs through a combination of supervised classification and anomaly-detection-based methods.

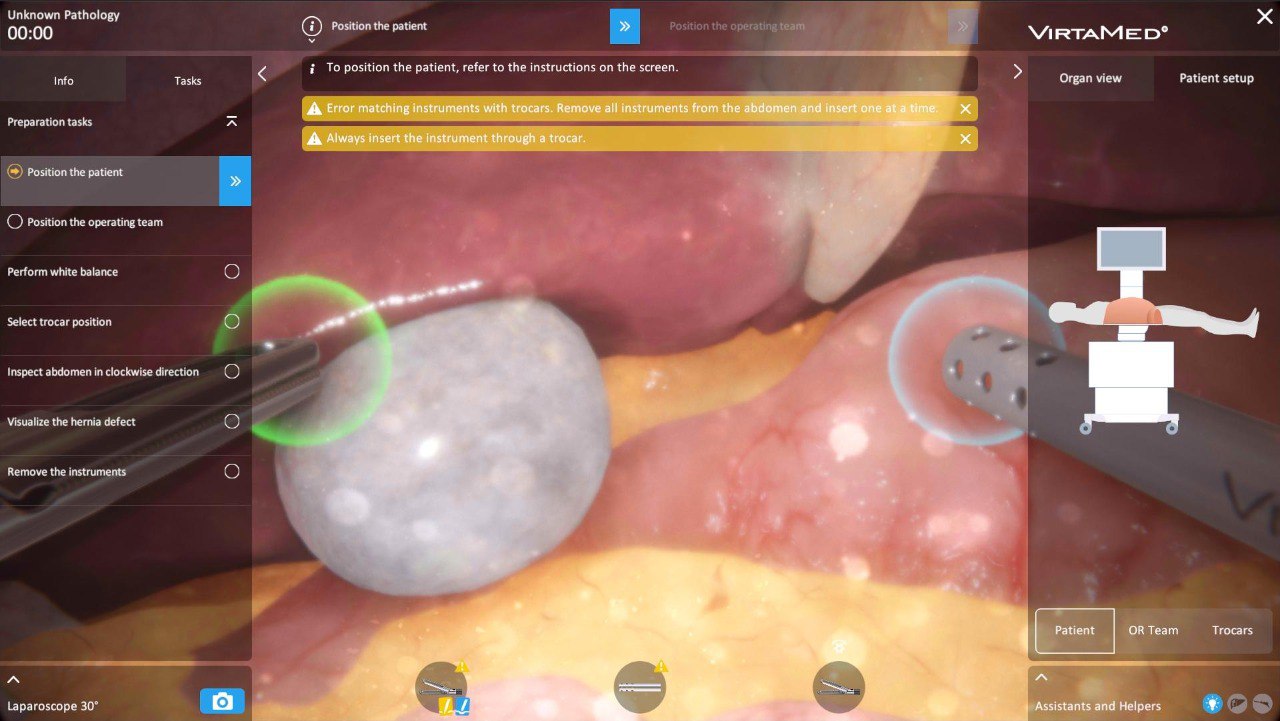

Surgical Performance Evaluation

Teaching complex surgical skills more efficiently and rigorously can have important implications for the overall quality of healthcare. Augmented reality surgical simulators provide an effective platform for training surgical residents. We model the behaviour of surgical trainees in order to adapt the teaching process based on expert data gathered with the simulators. A suitable way to model this behaviour is as a sequential decision-making agent interacting with the simulated surgical environment, a setting studied within the reinforcement learning formalism. The project studies the elicitation of surgical decision-making behaviour in both the forward and inverse RL settings, with the aim of deriving teaching-assistance policies and trainee evaluation schemes grounded in expert data.

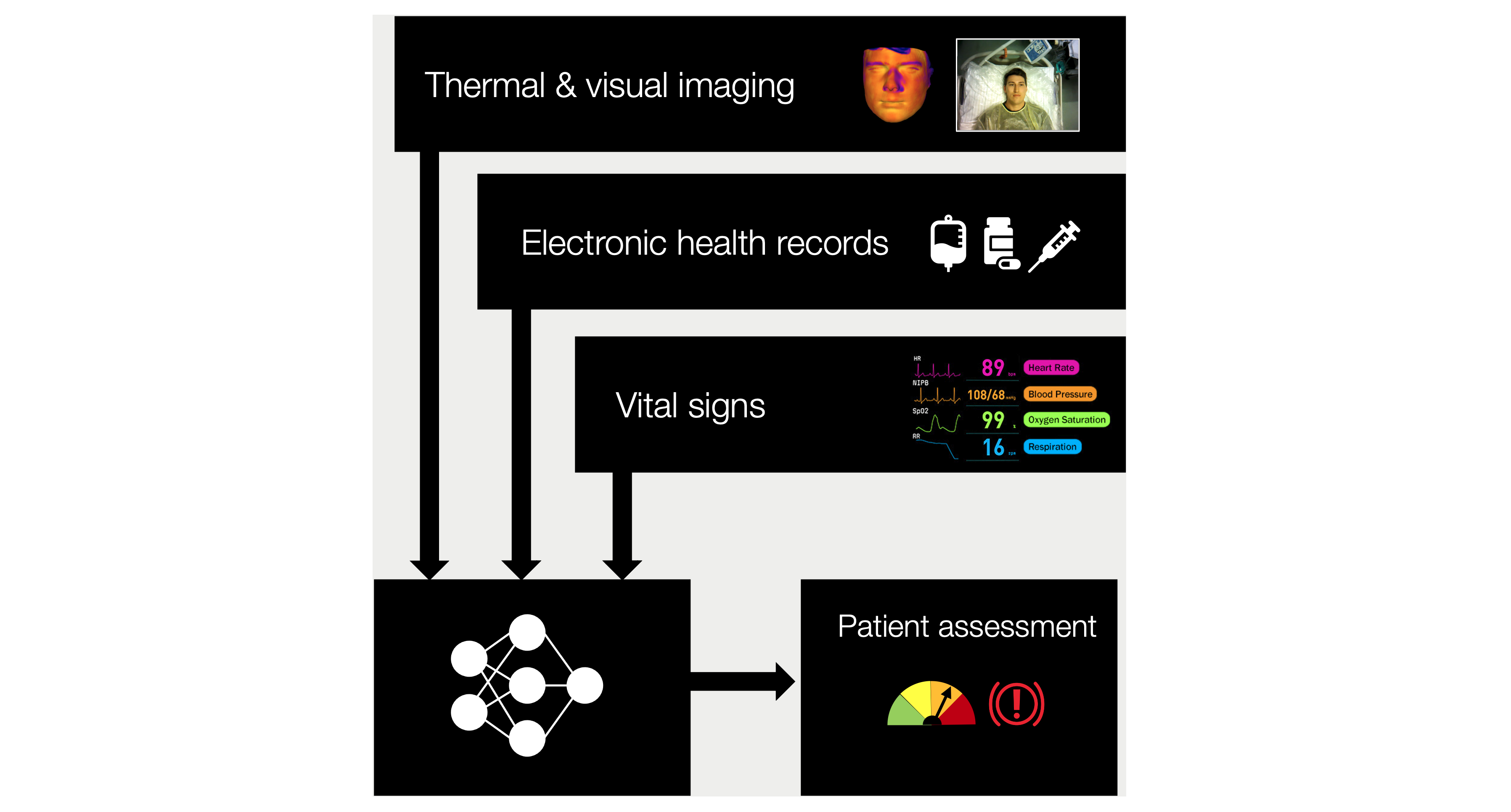

ICU Surveillance

Machine-learning-based early detection of complications in intensive care is meant to enable medical professionals to attend to a patient sooner and to start lifesaving treatment or drug administration earlier. Most related research is based on data routinely stored in electronic health records, because this data is readily available and systems relying on it face a low adoption barrier. However, electronic health records are designed for other purposes, such as documentation, billing, and liability. A large portion of the data that medical professionals rely on is currently not available to machine learning researchers. This project aims to close this gap between data availability and need. As an interdisciplinary team of machine learning engineers and medical doctors, we designed an open-source, multi-modal patient monitoring and recording platform for high-dimensional, real-time clinical phenotype surveillance and research in critical care. The data collected consists of:

- Multi-spectral imaging of patients' faces and upper bodies

- High-frequency waveforms of vital parameters: electrocardiogram (ECG), arterial blood pressure (ABP), and the plethysmographic waveform (PLETH) as measured by the pulse oximeter

- The routinely collected information as recorded in the electronic health records

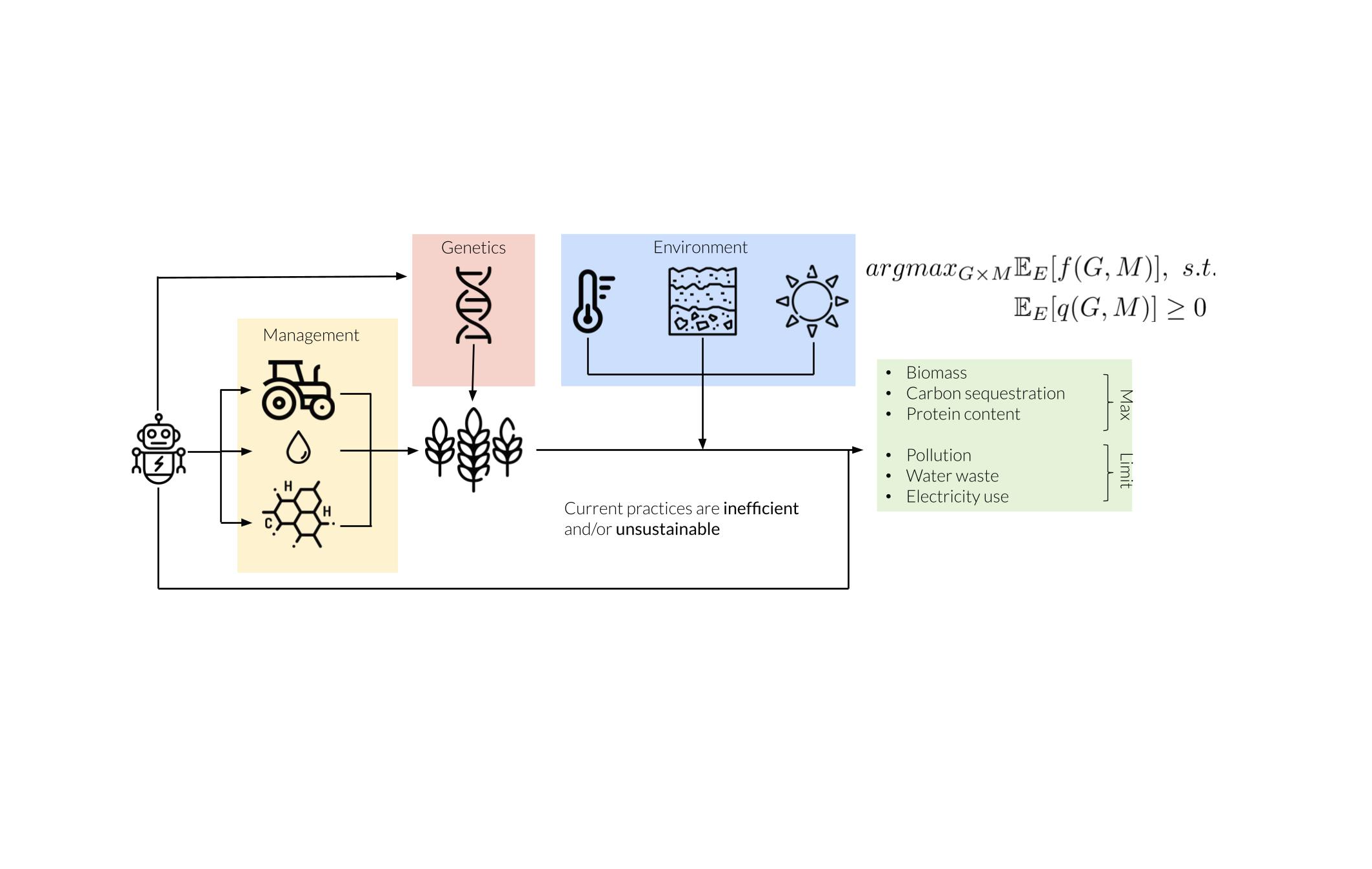

ML for Sustainable Agriculture

Farming systems of the future will need to feed an ever-growing population that is increasingly concentrated in urban areas. To add to the challenge, this must be accomplished while reducing environmental impact and despite increasingly extreme weather conditions. Encouragingly, significant amounts of data are being collected on agricultural processes at all scales, from the performance of different crop varieties to the outcomes of farm management practices. Using this data to tackle the challenges in agriculture will depend in part on machine learning algorithms that translate it into actionable understanding. The high-level idea of the project is to use machine learning techniques to learn the genomic, environmental and management interactions that regulate crop growth. The learned functional dependencies are then used to optimize the genetic and management aspects with new tools from reinforcement learning.

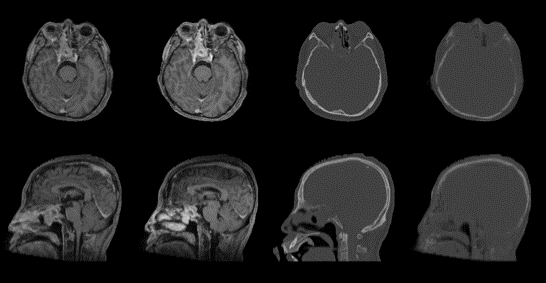

MR-based synthetic CT

Conventionally, Computed Tomography (CT) is the primary imaging modality for radiotherapy dose planning. Although Magnetic Resonance Imaging (MRI) is also acquired during the pre-treatment phase, it is mainly used to delineate tumors and organs at risk. However, calculating proton dose distributions and optimizing treatment plans directly on MRI would bring many benefits and opportunities. In particular, direct planning on MRI would (1) reduce the imaging dose from daily re-planning, (2) reduce image artifacts caused by metal implants, and (3) eliminate the inherent registration errors associated with propagating contours from MRI to CT. The deep-learning-based method treats image synthesis as an intensity-mapping problem and learns the nonlinear relationship between MRI and CT with regression techniques.

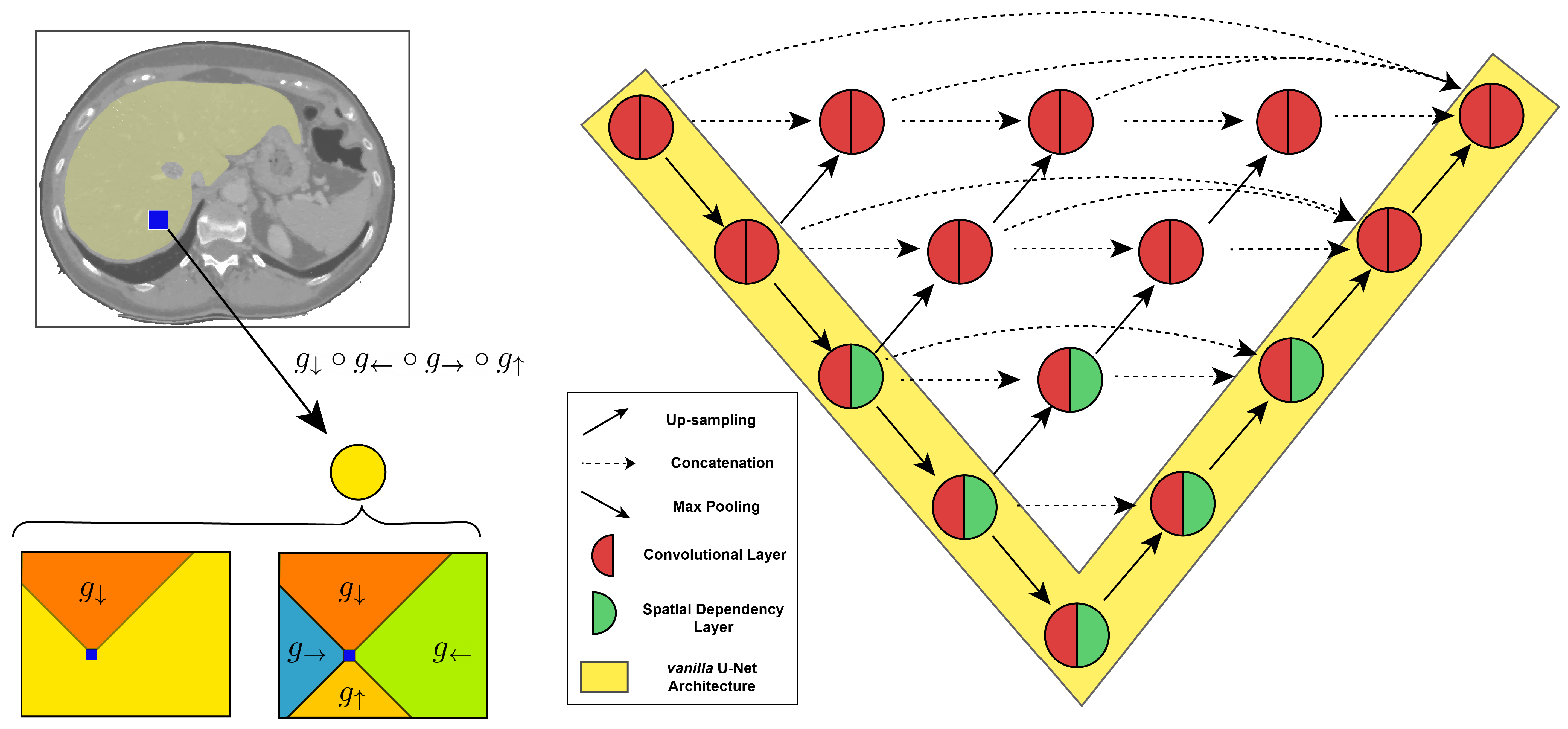

Holistic Medical Image Segmentation

For medical image segmentation to generalize, we need two components: the identification of local descriptors and, at the same time, a holistic representation of the image that captures long-range spatial dependencies. We demonstrate that the state of the art does not achieve the latter.

So far, we have introduced a novel deep neural network architecture endowed with spatial recurrence that achieves a holistic image representation. The implementation relies on gated recurrent units, in particular on spatial dependency layers that traverse the feature map directionally, greatly increasing each layer's receptive field and explicitly modeling relationships between non-adjacent pixels.
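
To illustrate why a directional traversal enlarges the receptive field, the sketch below runs a plain GRU cell along every row of a feature map from left to right, so the output at each column depends on all columns to its left. This is a minimal sketch under stated assumptions (random weights, no biases, a single direction, hypothetical function names), not the published layer; a full spatial dependency layer would presumably combine several directional sweeps.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def make_gru_params(in_dim, hidden_dim, seed=0):
    """Random GRU weights without biases (hypothetical initialisation, illustration only)."""
    rng = np.random.default_rng(seed)
    params = {}
    for gate in ("z", "r", "h"):
        params["W" + gate] = rng.normal(scale=0.5, size=(in_dim, hidden_dim))
        params["U" + gate] = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
    return params


def left_to_right_sweep(feature_map, p):
    """Run a GRU along each row of an (H, W, C) feature map, left to right.

    The output at column j depends on all input columns 0..j of the same row,
    so a single sweep already covers the full row width.
    """
    height, width, _ = feature_map.shape
    hidden_dim = p["Uz"].shape[0]
    h = np.zeros((height, hidden_dim))            # one hidden state per row
    out = np.zeros((height, width, hidden_dim))
    for j in range(width):
        x = feature_map[:, j, :]                  # (H, C): column j of every row
        z = sigmoid(x @ p["Wz"] + h @ p["Uz"])    # update gate
        r = sigmoid(x @ p["Wr"] + h @ p["Ur"])    # reset gate
        h_cand = np.tanh(x @ p["Wh"] + (r * h) @ p["Uh"])
        h = (1.0 - z) * h + z * h_cand
        out[:, j, :] = h
    return out
```

By contrast, a single 3x3 convolution only connects each output to its immediate neighbours, so many stacked layers would be needed to relate pixels at opposite ends of a row.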

Current efforts in the project are directed to extending the current approach to 3D medical images and additional imaging types.

Highlighted Focus Areas

Explore our Focus Areas

We study the statistical and algorithmic principles behind learning from data. One key issue is the notion of complexity, and how to control it while finding a model that is in agreement with the observed measurements. We focus our efforts on structured data, investigating approaches such as clustering, graphical models and dynamical systems. A major part of our algorithmic and modeling work is devoted to quantifying the robustness of the learned structures, i.e., to providing the data analyst with uncertainty estimates for models and methods.
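
A textbook way to trade model complexity against agreement with the measurements is a penalised likelihood criterion such as the Bayesian information criterion (BIC). The sketch below uses polynomial regression with i.i.d. Gaussian noise purely as an illustration; the function names are hypothetical, and this is not a reproduction of the complexity notions the group develops for structured models.

```python
import numpy as np


def bic_polynomial(x, y, degree):
    """BIC of a least-squares polynomial fit under i.i.d. Gaussian noise."""
    coeffs = np.polyfit(x, y, degree)
    residuals = y - np.polyval(coeffs, x)
    n = len(y)
    sigma2 = np.mean(residuals ** 2)                  # ML estimate of the noise variance
    log_lik = -0.5 * n * (np.log(2 * np.pi * sigma2) + 1)
    n_params = degree + 2                             # polynomial coefficients + noise variance
    return -2 * log_lik + n_params * np.log(n)        # misfit term + complexity penalty


def select_degree(x, y, max_degree=10):
    """Return the degree with the lowest BIC, together with all scores."""
    scores = {d: bic_polynomial(x, y, d) for d in range(max_degree + 1)}
    return min(scores, key=scores.get), scores
```

For data generated by a quadratic plus small noise, underfitting degrees are penalised through the likelihood term and overfitting degrees through the log(n) penalty, so the selected degree is typically close to 2.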

One of the factors limiting a more widespread adoption of reinforcement learning techniques is the design of an appropriate reward function that provides the weak supervision signal to the learning agent. A principled approach to this problem is inverse reinforcement learning, which infers the reward function from recorded demonstrations by experts who are assumed to act optimally in a particular environment, i.e., to perform the desired behaviour. One weakness of this approach is that there is typically a large set of ambiguous reward functions that describe the observed expert behaviour equally well. A common way to circumvent this ambiguity is to use maximum entropy models of reward, which allow a certain degree of disambiguation by imposing the maximum entropy objective on the policies induced by the reward function. While this approach ameliorates the situation, imposing further constraints on the representations may also be useful.

There are a number of outstanding challenges associated with learning expressive reward functions, both theoretical and in the application domain. These models typically assume the demonstrated behaviour to be near-optimal. This assumption is often too strong for the available data, which typically reflects a large variety of suboptimal behaviour due to noise. For such data to be useful, we need to model this suboptimality in a principled manner. Besides leading to more robust reward functions through better disambiguation, modeling suboptimality explicitly allows for an improved understanding of the experts' behavioural patterns.
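
To make the maximum entropy idea concrete, here is a minimal sketch of finite-horizon maximum entropy IRL on a toy five-state chain with deterministic left/right moves and one-hot state rewards. The environment, horizon, learning rate and all function names are hypothetical choices for illustration; the update uses the standard MaxEnt gradient, i.e., the expert's state-visitation counts minus the counts expected under the soft-optimal policy induced by the current reward.

```python
import numpy as np

N_STATES, HORIZON = 5, 8
ACTIONS = (-1, +1)  # move left / move right on a chain, clipped at both ends


def step(s, a):
    return min(max(s + a, 0), N_STATES - 1)


def soft_policy(reward):
    """Finite-horizon soft value iteration; returns pi[t, s, a]."""
    v_next = np.zeros(N_STATES)
    pi = np.zeros((HORIZON, N_STATES, len(ACTIONS)))
    for t in reversed(range(HORIZON)):
        q = np.array([[reward[s] + v_next[step(s, a)] for a in ACTIONS]
                      for s in range(N_STATES)])
        v = np.logaddexp.reduce(q, axis=1)        # soft maximum over actions
        pi[t] = np.exp(q - v[:, None])            # Boltzmann policy
        v_next = v
    return pi


def expected_visits(pi, start_state=0):
    """Expected number of visits to each state over the horizon under policy pi."""
    d = np.zeros(N_STATES)
    d[start_state] = 1.0
    visits = np.zeros(N_STATES)
    for t in range(HORIZON):
        visits += d
        d_new = np.zeros(N_STATES)
        for s in range(N_STATES):
            for i, a in enumerate(ACTIONS):
                d_new[step(s, a)] += d[s] * pi[t, s, i]
        d = d_new
    return visits


def maxent_irl(expert_trajectories, lr=0.1, n_iter=500):
    """Gradient ascent on the MaxEnt log-likelihood of the expert state sequences."""
    expert_visits = np.zeros(N_STATES)
    for traj in expert_trajectories:
        np.add.at(expert_visits, traj, 1.0)
    expert_visits /= len(expert_trajectories)
    reward = np.zeros(N_STATES)
    for _ in range(n_iter):
        reward += lr * (expert_visits - expected_visits(soft_policy(reward)))
    return reward


reward = maxent_irl([[0, 1, 2, 3, 4, 4, 4, 4]])  # expert walks right and stays at the end
```

Adding the same constant to every state reward leaves the soft policy unchanged, so the reward is only identified up to such a shift, a small instance of the ambiguity discussed above. In this toy setup the gradient components always sum to zero, so the learned rewards keep summing to zero.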

Identifying the right invariances that allow generalization to completely unseen domains is crucial for deploying models robustly in practice and for combating distributional shift. We study invariant structures in the solution space that enable knowledge transfer.

Interpretable ML aims to make model behaviour understandable to humans, a goal that lies at the heart of human-machine interaction. We use these explanations for model debugging, fairness, robustness, and knowledge discovery. Further, we critically validate and study interpretable models and post-hoc explanation methods, enhancing their faithfulness and usefulness.
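
Post-hoc explanations come in many forms; one of the simplest model-agnostic ones is permutation feature importance, sketched below under the assumption of a regression model scored by mean squared error. The function name and toy data are hypothetical, and this is a generic technique rather than one of the group's own methods. Checking that such a method recovers known ground-truth importances on synthetic data is one basic form of the validation mentioned above.

```python
import numpy as np


def permutation_importance(predict, x, y, n_repeats=10, seed=0):
    """Mean increase in squared error when each feature column is shuffled."""
    rng = np.random.default_rng(seed)
    base_mse = np.mean((predict(x) - y) ** 2)
    importances = np.zeros(x.shape[1])
    for j in range(x.shape[1]):
        increases = []
        for _ in range(n_repeats):
            x_perm = x.copy()
            # Shuffling column j breaks its relationship with the target.
            x_perm[:, j] = rng.permutation(x_perm[:, j])
            increases.append(np.mean((predict(x_perm) - y) ** 2) - base_mse)
        importances[j] = np.mean(increases)
    return importances


# Toy check: the target depends strongly on feature 0, weakly on feature 1, not on feature 2.
rng = np.random.default_rng(0)
x = rng.normal(size=(500, 3))
y = 3.0 * x[:, 0] + 0.5 * x[:, 1] + 0.1 * rng.normal(size=500)
w, *_ = np.linalg.lstsq(x, y, rcond=None)


def predict(a):
    return a @ w


importances = permutation_importance(predict, x, y)
```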

Previous Projects

Statistical Learning Theory

We prove that a time series satisfies a (linear) multivariate autoregressive moving average (VARMA) model in both time directions if all innovations are normally distributed. This reversibility breaks down if the innovations are non-Gaussian. This means that under the assumption of a VARMA process with non-Gaussian noise, the arrow of time becomes detectable.
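
The effect can be illustrated with the simplest special case, a univariate AR(1) process. The sketch below fits the autoregression forwards and backwards in time and measures how dependent the residual is on the regressor. Least squares already makes them uncorrelated, so the correlation of their squares is used as a crude higher-order dependence proxy; a careful analysis would use a proper independence test. The process parameters, the proxy and the function names are all illustrative assumptions.

```python
import numpy as np


def simulate_ar1(n, a, noise, seed=0, burn_in=500):
    """x_t = a * x_{t-1} + e_t with i.i.d. innovations drawn by noise(rng, size)."""
    rng = np.random.default_rng(seed)
    e = noise(rng, n + burn_in)
    x = np.zeros(n + burn_in)
    for t in range(1, n + burn_in):
        x[t] = a * x[t - 1] + e[t]
    return x[burn_in:]  # drop the transient so the series is approximately stationary


def residual_dependence(x):
    """|corr(residual^2, regressor^2)| for a least-squares AR(1) fit without intercept."""
    past, present = x[:-1], x[1:]
    coef = past @ present / (past @ past)
    resid = present - coef * past
    return abs(np.corrcoef(resid ** 2, past ** 2)[0, 1])


def time_direction_scores(x):
    # A small score means the residuals look independent of the regressor.
    return {"forward": residual_dependence(x), "backward": residual_dependence(x[::-1])}


def gaussian(rng, size):
    return rng.normal(size=size)


def uniform(rng, size):
    return rng.uniform(-np.sqrt(3.0), np.sqrt(3.0), size=size)  # unit variance, non-Gaussian
```

With Gaussian innovations both directions yield residuals that look independent of the regressor; with uniform innovations only the true (forward) direction does, which is what makes the arrow of time detectable.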

Many problems require classifying or clustering discrete structures such as rankings, trees, or graphs based on similarity. The aim of this work is to study inference on discrete data structures, with the goal of obtaining a hierarchical model family that captures the discrete nature of the data and of deriving robust optimisation techniques. The expected contributions include both a theoretical understanding of inference on discrete data structures and a viable model toolkit. From a theoretical point of view, the work contributes to an understanding of the relation between model complexity and algorithmic complexity: how does inference on discrete data and combinatorial objects behave in terms of robustness, consistency, and stability, and which notions of complexity are appropriate? From an application perspective, technology for analysing discrete data is valuable in areas as diverse as economics (e.g., rankings in preference modelling), the social sciences (e.g., consensus finding), and the natural sciences (e.g., large-scale graph data in proteomics).
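
Clustering rankings by similarity requires a distance between permutations. A standard choice, assumed here purely for illustration, is the Kendall tau distance, which counts the item pairs that two rankings order differently; the resulting distance matrix can then be fed to any distance-based clustering method. The function names are hypothetical.

```python
from itertools import combinations

import numpy as np


def kendall_tau_distance(ranking_a, ranking_b):
    """Number of item pairs ordered differently by two rankings of the same items.

    Each ranking lists the items from best to worst.
    """
    pos_a = {item: i for i, item in enumerate(ranking_a)}
    pos_b = {item: i for i, item in enumerate(ranking_b)}
    return sum(
        1
        for u, v in combinations(ranking_a, 2)
        if (pos_a[u] - pos_a[v]) * (pos_b[u] - pos_b[v]) < 0  # discordant pair
    )


def distance_matrix(rankings):
    """Symmetric matrix of pairwise Kendall tau distances."""
    n = len(rankings)
    d = np.zeros((n, n), dtype=int)
    for i, j in combinations(range(n), 2):
        d[i, j] = d[j, i] = kendall_tau_distance(rankings[i], rankings[j])
    return d
```

This direct pair count costs O(m^2) per comparison for m items, which is adequate for short rankings; a merge-sort-based count reduces it to O(m log m).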

Machine Learning and Neuroimaging

Parkinson's disease is a neurodegenerative disease with a wide spectrum of symptoms. To find the appropriate therapy for an individual, it is necessary to determine the patient's Parkinson's disease subtype. For this purpose, we perform model-based clustering on Magnetic Resonance Images (MRI) and combine it with clinical data.

Today, dementia is mostly diagnosed only after the disease has progressed noticeably. It is therefore highly desirable to have a reliable predictor of a person's cognitive development. Using multimodal Magnetic Resonance Imaging, we are building a multivariate classifier that predicts an elderly person's risk of developing dementia.

Alzheimer's disease progression can be physiologically quantified by the number and size of so-called senile plaques in mouse brains. Automatically detecting and counting these plaques and analysing their spatial distribution is a crucial step towards a pipeline for testing new types of treatment.
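
A classical baseline for the detection-and-counting step is to threshold a (pre-processed) microscopy image and label its connected components. The sketch below assumes that plaques appear as bright regions and that a fixed intensity threshold and minimum region size are sensible; both parameters and the function name are hypothetical, and a learned detector could replace the threshold.

```python
import numpy as np
from scipy import ndimage


def count_plaques(image, threshold, min_size=1):
    """Count connected bright regions (candidate plaques) and return their pixel sizes."""
    mask = image > threshold
    labels, _ = ndimage.label(mask)            # 4-connectivity by default for 2D input
    sizes = np.bincount(labels.ravel())[1:]    # drop the background label 0
    kept = sizes[sizes >= min_size]            # discard regions that are too small
    return len(kept), kept
```

The label image returned by `ndimage.label` can also serve as the starting point for the spatial analysis, for example by computing region centroids.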

We analyse extremely high-resolution electron microscopy images to automatically build a complete 3D geometric model of neurons and the connections between them. Automation is essential in this area to allow large-scale experiments with thousands of neurons. To this end, we develop specialised computer vision and machine learning techniques based on established methods such as graphical models, conditional Markov fields, feature learning, random forests, deep learning, and flow estimation.

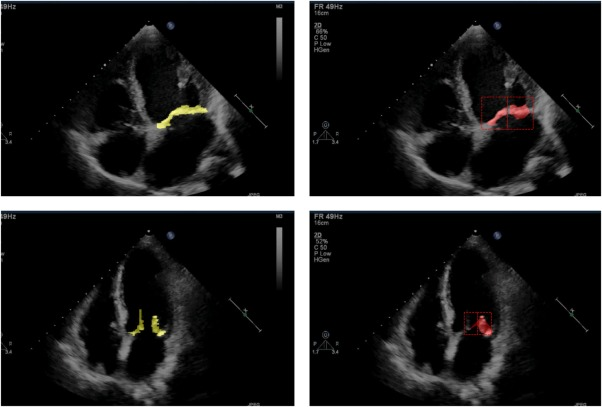

Medical Image Processing

Segmentation of the mitral valve annulus and leaflets is a crucial first step in establishing a machine learning pipeline that can support physicians in multiple tasks, e.g., diagnosis of mitral valve diseases, surgical planning, and intraoperative procedures. Current methods for mitral valve segmentation on 2D echocardiography videos require extensive interaction with annotators and perform poorly on low-quality and noisy videos. We propose an automated and unsupervised method for mitral valve segmentation based on a low-dimensional embedding of the echocardiography videos using neural network collaborative filtering. The method is evaluated on a collection of echocardiography videos of patients with a variety of mitral valve diseases, and additionally on an independent test cohort. It outperforms state-of-the-art unsupervised and supervised methods on low-quality videos or in the case of sparse annotation.
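
The intuition behind a low-dimensional embedding of a video can be illustrated with a much simpler stand-in for the neural collaborative filtering model described above: a truncated SVD of the pixels-by-frames matrix. Slowly varying content is captured by the leading singular vectors, while a fast-moving structure such as a valve leaflet stays in the residual. The rank, the energy map and the function name are illustrative assumptions, not the published method.

```python
import numpy as np


def motion_energy(video, rank=1):
    """Per-pixel energy left over after a rank-`rank` approximation of a video.

    video: array of shape (frames, height, width).
    """
    frames, height, width = video.shape
    m = video.reshape(frames, height * width).T           # pixels x frames matrix
    u, s, vt = np.linalg.svd(m, full_matrices=False)
    low_rank = (u[:, :rank] * s[:rank]) @ vt[:rank]       # static / slowly varying part
    residual = m - low_rank
    return (residual ** 2).sum(axis=1).reshape(height, width)
```

Thresholding such an energy map gives a crude, annotation-free localisation of the moving region, which is the role the learned embedding plays in a far more robust way.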

Link to Publication

The pathological analysis of tissue microarrays (TMAs) plays a major role in cancer research and personalized medicine. The assessment of TMAs comprises not only cell nucleus detection and classification into benign and malignant cases; judging the morphological tissue structure and estimating immunohistochemical protein staining are also important aspects of this field. A computational framework for these tasks would greatly facilitate and support the daily workflow of pathologists, who assess several dozen such images per day. Since TMA images vary considerably across tissues, protocols, and experimental settings, an automatic workflow would also introduce objectivity, reproducibility, and stability into image interpretation. However, medical tissue images are highly complex and not easy to handle with computer vision methods.

Computer Vision

Many problems in computer vision can be formulated so that prior knowledge from experts is incorporated. This can be either very specific knowledge for specialised tasks or just common sense.

Examples are:

- desired properties of transformation invariance (such as rotation, scale, etc.)

- connection between data samples (smooth transition from one slice to another)

- specific object distribution properties (such as shape and size priors)

We develop new computer vision and machine learning algorithms that incorporate these constraints and priors, thereby improving results on a specific problem compared to general-purpose algorithms.

In this project we consider discriminative models for problems that have an underlying structure, i.e., we overcome the independence assumption made in many traditional discriminative models. In our setting, the structure is specified by a graphical model and might correspond to a grid for image segmentation in computer vision or to a complete graph for multilabel classification. One important research focus is approximate training of these models, which is needed because these models are, in general, computationally intractable. Furthermore, we are interested in applications to vision problems.
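
The grid case can be illustrated with a binary segmentation model whose energy combines a unary term tying each pixel label to its observation with a Potts term that penalises disagreeing neighbours. For this particular binary, submodular energy exact MAP inference is possible with graph cuts, but general structured models are intractable, and iterated conditional modes (ICM) is one of the simplest approximate alternatives. The sketch shows approximate inference only, not the approximate training the project studies; the weights and function names are hypothetical.

```python
import numpy as np


def local_energy(labels, noisy, i, j, value, beta, unary_weight):
    """Energy terms involving pixel (i, j) if it took label `value`."""
    h, w = labels.shape
    energy = unary_weight * float(value != noisy[i, j])      # data (unary) term
    for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):        # 4-neighbour grid
        ni, nj = i + di, j + dj
        if 0 <= ni < h and 0 <= nj < w:
            energy += beta * float(value != labels[ni, nj])  # Potts smoothness term
    return energy


def icm_denoise(noisy, beta=0.7, unary_weight=1.0, n_sweeps=5):
    """Iterated conditional modes: greedy, approximate MAP inference on a binary grid MRF."""
    labels = noisy.copy()
    h, w = labels.shape
    for _ in range(n_sweeps):
        changed = False
        for i in range(h):
            for j in range(w):
                e0 = local_energy(labels, noisy, i, j, 0, beta, unary_weight)
                e1 = local_energy(labels, noisy, i, j, 1, beta, unary_weight)
                best = 0 if e0 <= e1 else 1
                if best != labels[i, j]:
                    labels[i, j] = best
                    changed = True
        if not changed:  # local minimum of the energy reached
            break
    return labels
```

With these weights an isolated flipped pixel (all four neighbours disagreeing) is relabelled, while pixels on a straight object boundary keep their label, which is the smoothing behaviour the pairwise structure is meant to encode.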

This project is dedicated to integrating approximate geometry inference with object segmentation and recognition. The main challenges lie in constructing a feature transform that is adequate for both tasks and in devising a learning algorithm that can exploit the relation between tasks that are different but defined on the same domain.

Applied Machine Learning

The project aims to provide a method for safety monitoring of trains in the SBB network. It focuses on automatically identifying wheel defects on freight trains based on the existing SBB infrastructure.

The method is expected to provide within-class and overall wheel defect rankings. This goal involves adapting and extending current machine learning methods such as multiple instance learning, semi-supervised classification and simultaneous regression and ranking.

Understanding sleep and its perturbation by environment, mutation, or medication is a key problem in biomedical research. To accelerate scientific discovery, we present an online platform for high-throughput animal sleep scoring.

Link to the platform

Scalable Machine Learning

Similar to the well-known "heart attack" (ACS, acute coronary syndrome), the so-called "broken heart syndrome" (TTS, takotsubo cardiomyopathy syndrome) presents with chest pain radiating into the chin and arms, and is therefore difficult to distinguish from it. However, the ability to tell the two apart quickly and reliably is of extreme importance to intensive care unit doctors, since the conditions sometimes require opposite therapies, and in a heart attack there are only minutes to react.

Group Collaborators and Affiliates